Maths for AI and ML

1. Linear Algebra for Machine Learning

- Vectors and Matrices

  - Operations: addition, multiplication, transpose

  - Use in data representation

- Matrix Multiplication and Linear Transformations

- Determinants and Inverses

- Eigenvalues and Eigenvectors

  - PCA and dimensionality reduction

- Applications:

  - Word embeddings (word2vec, GloVe)

  - Neural network forward and backward propagation
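The operations listed in this section can be sketched in a few lines of plain Python. This is an illustrative sketch, not a reference implementation: the helper names (`transpose`, `matmul`, `matvec`, `power_iteration`) and the example matrices are assumptions made here, and practical code would normally rely on a numerical library instead. Power iteration is used as one simple way to estimate a dominant eigenvalue; it is not the only method.

```python
# Pure-Python sketch of basic linear algebra; all names here are hypothetical helpers.

def transpose(m):
    """Swap rows and columns of a matrix given as a list of rows."""
    return [list(col) for col in zip(*m)]

def matmul(a, b):
    """Multiply matrix a (n x k) by matrix b (k x m)."""
    bt = transpose(b)
    return [[sum(x * y for x, y in zip(row, col)) for col in bt] for row in a]

def matvec(m, v):
    """Apply the linear transformation represented by m to the vector v."""
    return [sum(x * y for x, y in zip(row, v)) for row in m]

def power_iteration(m, steps=100):
    """Estimate the dominant eigenvalue and eigenvector of a square matrix."""
    v = [1.0] * len(m)
    for _ in range(steps):
        w = matvec(m, v)
        norm = sum(x * x for x in w) ** 0.5
        v = [x / norm for x in w]  # keep the vector at unit length
    # Rayleigh quotient: v . (m v), valid because v has unit length
    eigenvalue = sum(x * y for x, y in zip(v, matvec(m, v)))
    return eigenvalue, v

# Assumed example: a 90-degree rotation applied to the vector (1, 0)
rotated = matvec([[0.0, -1.0], [1.0, 0.0]], [1.0, 0.0])

# Assumed example: a matrix that stretches the x-axis by a factor of 2
A = [[2.0, 0.0], [0.0, 1.0]]
eigenvalue, eigenvector = power_iteration(A)
```

Here the rotation moves (1, 0) onto the y-axis, and the estimated dominant eigenvalue of `A` approaches its largest stretch factor, which is the same idea PCA uses to find directions of greatest variance.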

2. Calculus for Optimization

- Limits and Continuity

- Derivatives and Gradients

  - Partial derivatives

  - Gradient vectors

- Chain Rule

  - Backpropagation in neural networks

- Optimization

  - Gradient descent

  - Learning rate intuition

- Applications:

  - Training deep neural networks

  - Cost/loss function minimization
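Gradient descent and the effect of the learning rate can be illustrated with a small sketch. The quadratic loss, its hand-derived gradient, and the function names below are assumptions chosen for illustration; real training loops compute gradients automatically and work on far larger parameter vectors.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=100):
    """Repeatedly step against the gradient: x <- x - learning_rate * grad(x)."""
    x = list(start)
    for _ in range(steps):
        g = grad(x)
        x = [xi - learning_rate * gi for xi, gi in zip(x, g)]
    return x

def loss(p):
    """Assumed example loss with its minimum at (3, -1)."""
    x, y = p
    return (x - 3.0) ** 2 + (y + 1.0) ** 2

def loss_grad(p):
    """Gradient vector of the loss: the partial derivatives w.r.t. x and y."""
    x, y = p
    return [2.0 * (x - 3.0), 2.0 * (y + 1.0)]

# A moderate learning rate converges towards the minimum.
minimum = gradient_descent(loss_grad, [0.0, 0.0], learning_rate=0.1)

# A learning rate that is too large overshoots further on every step.
diverged = gradient_descent(loss_grad, [0.0, 0.0], learning_rate=1.1, steps=50)
```

For this particular loss each step multiplies the distance to the minimum by `1 - 2 * learning_rate`, so a rate of 0.1 shrinks the error while a rate of 1.1 makes it grow.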

3. Probability and Statistics

- Basic Probability Rules

  - Bayes’ Theorem

  - Conditional probability

- Probability Distributions

  - Gaussian, Bernoulli, Binomial, etc.

- Expectation and Variance

- Maximum Likelihood Estimation (MLE)

- Hypothesis Testing

- Applications:

  - Naive Bayes classifiers

  - Probabilistic models like HMMs, Bayesian networks
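Two of the ideas in this section, Bayes' theorem and maximum likelihood estimation, can be sketched briefly. The function names and the numbers used (a 1% prior, a 90% true positive rate, a 5% false positive rate, and the coin-flip samples) are made-up values for illustration only.

```python
def bayes_posterior(prior, likelihood, false_positive_rate):
    """Return P(H | E) given P(H), P(E | H) and P(E | not H)."""
    # Law of total probability gives the evidence term P(E).
    evidence = likelihood * prior + false_positive_rate * (1.0 - prior)
    return likelihood * prior / evidence

def bernoulli_mle(samples):
    """MLE of a Bernoulli parameter: the fraction of successes in the sample."""
    return sum(samples) / len(samples)

# Assumed example: a rare condition and an imperfect test.
posterior = bayes_posterior(prior=0.01, likelihood=0.9, false_positive_rate=0.05)

# Assumed example: 6 successes out of 8 trials.
p_hat = bernoulli_mle([1, 0, 1, 1, 0, 1, 1, 1])
```

With these assumed inputs the posterior stays well below the test's 90% true positive rate because the prior is so small, which is the kind of reasoning a Naive Bayes classifier applies per feature.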

4. Discrete Mathematics & Logic

- Set Theory and Functions

- Graphs and Trees

- Boolean Logic

- Applications:

  - Decision trees

  - Knowledge representation in AI

5. Information Theory

- Entropy and Information Gain

- KL Divergence

- Cross-Entropy Loss

- Applications:

  - Decision trees, neural networks, regularization
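The three quantities named in this section are closely related, as a short sketch shows. The function names and the two example distributions are assumptions for illustration; the code also assumes that `q` is non-zero wherever `p` is non-zero, and it measures information in bits (base-2 logarithms).

```python
import math

def entropy(p):
    """Shannon entropy H(p) in bits; zero-probability terms contribute nothing."""
    return -sum(pi * math.log2(pi) for pi in p if pi > 0)

def cross_entropy(p, q):
    """Cross-entropy H(p, q) in bits; assumes q[i] > 0 wherever p[i] > 0."""
    return -sum(pi * math.log2(qi) for pi, qi in zip(p, q) if pi > 0)

def kl_divergence(p, q):
    """KL divergence D(p || q) = H(p, q) - H(p)."""
    return cross_entropy(p, q) - entropy(p)

p = [0.5, 0.5]  # assumed "true" distribution: a fair coin
q = [0.9, 0.1]  # assumed model distribution: a heavily biased coin

h_p = entropy(p)
kl_pq = kl_divergence(p, q)
```

Because the entropy of the true distribution does not depend on the model, minimizing cross-entropy loss with respect to `q` is equivalent to minimizing the KL divergence from `p` to `q`.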

6. Numerical Methods

- Root Finding (e.g., Newton-Raphson)

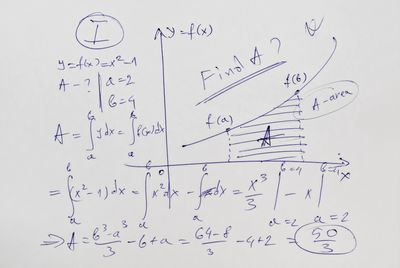

- Numerical Differentiation and Integration

- Linear Solvers (LU decomposition, etc.)

- Applications:

  - Solving equations in model training
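As an illustration of the root-finding topic above, Newton-Raphson can be sketched in a few lines. The function name, the tolerance, the iteration cap, and the example equation (x² - 2 = 0) are assumptions chosen here; the method needs a derivative and a reasonable starting point, and it is not guaranteed to converge for every function.

```python
def newton_raphson(f, df, x0, tol=1e-12, max_iter=50):
    """Find a root of f by iterating x <- x - f(x) / f'(x) from the guess x0."""
    x = x0
    for _ in range(max_iter):
        fx = f(x)
        if abs(fx) < tol:
            return x  # close enough to a root
        dfx = df(x)
        if dfx == 0:
            raise ZeroDivisionError("derivative is zero; Newton step is undefined")
        x = x - fx / dfx
    return x  # best estimate after max_iter steps

# Assumed example: the positive root of x^2 - 2, i.e. the square root of 2.
root = newton_raphson(lambda x: x * x - 2.0, lambda x: 2.0 * x, x0=1.0)
```

Each step replaces the function with its tangent line at the current guess and jumps to where that line crosses zero, which is why the method converges quickly near a simple root.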

7. Advanced Topics

- Convex Optimization

- Manifold Learning

- Topology Basics (for advanced deep learning)
