Linear algebra

1. Introduction to Linear Algebra

- What is linear algebra and why it's important in AI/ML

- Real-world examples:

  - Images as matrices

  - Word vectors in NLP

  - Feature spaces in ML

2. Scalars, Vectors, Matrices, and Tensors

- Scalars (0D), Vectors (1D), Matrices (2D), Tensors (nD)

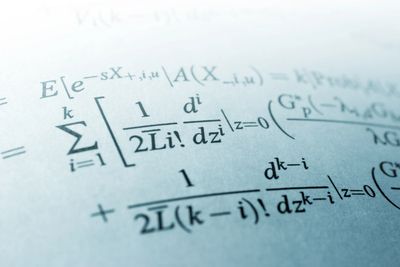

- Notation and representation

- Visualizing vectors and matrices (2D/3D)

- Python/NumPy exercises: Creating and manipulating arrays
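
As a starting point for the array exercises, the sketch below builds one object of each dimensionality with NumPy and inspects its `ndim` and `shape`. The variable names and values are illustrative only.

```python
import numpy as np

scalar = np.array(3.5)                    # 0D: a single number
vector = np.array([1.0, 2.0, 3.0])        # 1D: three components
matrix = np.array([[1, 2], [3, 4]])       # 2D: two rows, two columns
tensor = np.zeros((2, 3, 4))              # 3D: a stack of two 3x4 matrices

for name, arr in [("scalar", scalar), ("vector", vector),
                  ("matrix", matrix), ("tensor", tensor)]:
    print(name, "ndim =", arr.ndim, "shape =", arr.shape)

# Basic manipulation: reshape a range into a 2x3 matrix and index into it.
reshaped = np.arange(6).reshape(2, 3)
first_row = reshaped[0]          # row 0
last_entry = reshaped[1, 2]      # row 1, column 2
```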

3. Vector Operations

- Addition, subtraction

- Scalar multiplication

- Dot product (inner product)

- Geometric interpretation: cosine similarity

- Cross product (optional, for 3D)

- Vector norms (L1, L2)

- Unit vectors and normalization

- ML Application: Cosine similarity in NLP, clustering
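
The operations in this section can be tried on two small vectors. This is a minimal sketch; the vectors `a` and `b` are made up for illustration.

```python
import numpy as np

a = np.array([3.0, 4.0])
b = np.array([4.0, 3.0])

total = a + b                      # element-wise addition
scaled = 2 * a                     # scalar multiplication
dot = a @ b                        # dot (inner) product

l1 = np.linalg.norm(a, ord=1)      # L1 norm: sum of absolute values
l2 = np.linalg.norm(a)             # L2 norm: Euclidean length
unit_a = a / l2                    # normalization to unit length

# Cosine similarity: the dot product of the two normalized vectors.
cosine = dot / (np.linalg.norm(a) * np.linalg.norm(b))
print(cosine)
```

Cosine similarity depends only on the angle between the vectors, not on their lengths, which is why it is a common choice for comparing word vectors.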

4. Matrix Operations

- Matrix addition, multiplication

- Transpose

- Identity and zero matrices

- Symmetric matrices

- Matrix indexing in Python

- ML Application: Batch processing of data samples
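
A short sketch of these matrix operations, including the batch-processing idea: each row of `X` is treated as one data sample, so a single matrix product transforms the whole batch at once. The matrices `X` and `W` are illustrative assumptions.

```python
import numpy as np

# Batch of 3 samples with 2 features each (one sample per row).
X = np.array([[1.0, 2.0],
              [3.0, 4.0],
              [5.0, 6.0]])

# Weight matrix mapping 2 input features to 3 outputs.
W = np.array([[1.0, 0.0, -1.0],
              [0.5, 1.0,  0.0]])

out = X @ W                 # all samples transformed in one product
Xt = X.T                    # transpose: shape (2, 3)
I = np.eye(2)               # 2x2 identity matrix
Z = np.zeros((3, 2))        # zero matrix with the same shape as X

S = X.T @ X                 # X^T X is always symmetric

# Indexing: single entry, whole column, and a sub-block of rows.
entry = X[1, 0]
second_column = X[:, 1]
first_two_rows = X[:2]
```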

5. Linear Combinations & Span

- What is a linear combination?

- Span of vectors

- Linear dependence vs. independence

- ML Connection: Feature combinations, basis vectors
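
One way to explore linear dependence numerically is to stack the vectors as columns and compare the matrix rank with the number of vectors. The sketch below assumes three example vectors, where `v3` is deliberately built as a linear combination of the other two.

```python
import numpy as np

v1 = np.array([1.0, 0.0, 1.0])
v2 = np.array([0.0, 1.0, 1.0])

# A linear combination: v3 lies in the span of v1 and v2 by construction.
v3 = 2 * v1 - 3 * v2

pair = np.column_stack([v1, v2])
triple = np.column_stack([v1, v2, v3])

# Vectors are linearly independent when the rank equals their count.
pair_rank = np.linalg.matrix_rank(pair)
triple_rank = np.linalg.matrix_rank(triple)

print("v1, v2 independent:", pair_rank == 2)
print("v1, v2, v3 independent:", triple_rank == 3)
```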

6. Systems of Linear Equations

- Representing systems as matrices

- Row echelon form and Gaussian elimination

- Existence and uniqueness of solutions

- ML Application: Solving weights in linear regression
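
The sketch below writes a small system as `A x = b` and solves it, then fits linear regression weights by least squares. The numbers are made up; the regression data is generated from `y = 1 + 2x` so the recovered weights can be checked by eye.

```python
import numpy as np

# System:  2x +  y = 3
#           x + 3y = 5
A = np.array([[2.0, 1.0],
              [1.0, 3.0]])
b = np.array([3.0, 5.0])
solution = np.linalg.solve(A, b)      # unique solution since A is invertible

# Linear regression: more equations than unknowns, solved by least squares.
x = np.array([0.0, 1.0, 2.0, 3.0])
y = 1.0 + 2.0 * x
design = np.column_stack([np.ones_like(x), x])   # columns: intercept, slope
weights, residuals, rank, sing_vals = np.linalg.lstsq(design, y, rcond=None)

print("solution:", solution)
print("weights (intercept, slope):", weights)
```

`np.linalg.solve` expects a square, non-singular matrix, while `np.linalg.lstsq` also handles overdetermined systems such as regression problems.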

7. Matrix Inverse and Determinant

- When does a matrix have an inverse?

- Determinants and what they tell us (singularity)

- Computing inverse and determinant (manually & in NumPy)

- ML Application: Solving linear models, understanding when a data matrix is invertible
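
A minimal NumPy sketch of the determinant and inverse, using one invertible and one singular 2x2 matrix chosen for illustration. For a 2x2 matrix `[[a, b], [c, d]]` the manual formula is `det = a*d - b*c`.

```python
import numpy as np

A = np.array([[4.0, 7.0],
              [2.0, 6.0]])

det_manual = 4.0 * 6.0 - 7.0 * 2.0    # a*d - b*c
det_numpy = np.linalg.det(A)
A_inv = np.linalg.inv(A)

# A singular matrix: the second row is twice the first.
B = np.array([[1.0, 2.0],
              [2.0, 4.0]])
det_B = np.linalg.det(B)
B_is_invertible = np.linalg.matrix_rank(B) == B.shape[0]

print("det(A) =", det_numpy)
print("A @ A_inv is identity:", np.allclose(A @ A_inv, np.eye(2)))
print("B invertible:", B_is_invertible)
```

Because floating-point determinants are rarely exactly zero, comparing the rank with the matrix size is often a more robust singularity check than testing `det == 0`.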

8. Rank and Null Space

- Matrix rank (full rank vs. rank-deficient)

- Null space and solution space

- ML Application: Data compression, PCA
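
Rank and null space can both be read off the singular value decomposition. The sketch below is one possible approach, not the only one: it counts singular values above a tolerance to get the rank and takes the remaining right singular vectors as a null-space basis. The matrix `A` and the tolerance rule are assumptions made for the example.

```python
import numpy as np

# Rank-deficient matrix: the second row is twice the first.
A = np.array([[1.0, 2.0, 3.0],
              [2.0, 4.0, 6.0],
              [1.0, 0.0, 1.0]])

U, s, Vt = np.linalg.svd(A)

# Treat singular values below this tolerance as zero.
tol = s.max() * max(A.shape) * np.finfo(float).eps
rank = int(np.sum(s > tol))

# Rows of Vt beyond the rank span the null space of A.
null_basis = Vt[rank:]

print("rank:", rank)
print("null space dimension:", null_basis.shape[0])
print("A @ n close to zero:", np.allclose(A @ null_basis.T, 0.0, atol=1e-10))
```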

9. Eigenvalues and Eigenvectors

- What are they and why they matter

- Diagonalization

- Spectral decomposition

- ML Application: Principal Component Analysis (PCA), stability in RNNs
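
The sketch below diagonalizes a small symmetric matrix and rebuilds it from its eigenvalues and eigenvectors (the spectral decomposition). It also computes the spectral radius of a second matrix `W`, a hypothetical stand-in for a recurrent weight matrix: for a purely linear recurrence, repeated multiplication by `W` shrinks toward zero exactly when the spectral radius is below 1.

```python
import numpy as np

# Symmetric matrix: eigh returns real eigenvalues in ascending order
# and orthonormal eigenvectors as the columns of Q.
A = np.array([[2.0, 1.0],
              [1.0, 2.0]])
eigvals, Q = np.linalg.eigh(A)

# Spectral decomposition: A = Q diag(eigvals) Q^T
A_rebuilt = Q @ np.diag(eigvals) @ Q.T

# Each column q of Q satisfies A q = lambda q.
check = np.allclose(A @ Q, Q * eigvals)

# Spectral radius of an illustrative (hypothetical) recurrent weight matrix.
W = np.array([[0.5, 0.2],
              [0.1, 0.4]])
spectral_radius = np.max(np.abs(np.linalg.eigvals(W)))

print("eigenvalues:", eigvals)
print("spectral radius of W:", spectral_radius)
```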

10. Singular Value Decomposition (SVD)

- Matrix decomposition

- Low-rank approximation

- ML Application: Latent semantic analysis (NLP), collaborative filtering
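
A low-rank approximation keeps only the largest singular values. The sketch below applies this to a small made-up matrix `M` (it could be read as a toy user-by-item ratings table) and checks the known property that the Frobenius error of the rank-`k` truncation equals the norm of the discarded singular values.

```python
import numpy as np

# Illustrative 4x4 matrix; the values are invented for the example.
M = np.array([[5.0, 4.0, 1.0, 0.0],
              [4.0, 5.0, 0.0, 1.0],
              [1.0, 0.0, 5.0, 4.0],
              [0.0, 1.0, 4.0, 5.0]])

U, s, Vt = np.linalg.svd(M, full_matrices=False)

k = 2  # number of singular values to keep
M_k = (U[:, :k] * s[:k]) @ Vt[:k]     # rank-k approximation

error = np.linalg.norm(M - M_k)       # Frobenius norm of the residual
discarded = np.sqrt(np.sum(s[k:] ** 2))

print("singular values:", s)
print("approximation error:", error)
```

Storing `U[:, :k]`, `s[:k]`, and `Vt[:k]` instead of `M` is the basic idea behind SVD-based compression and latent-factor models.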

11. Projections and Orthogonality

- Projection of vectors

- Orthogonal and orthonormal vectors

- Gram-Schmidt process

- ML Application: Linear regression as projection onto feature space
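
The sketch below covers the three ideas in this section: projecting one vector onto another, orthonormalizing a set of vectors with Gram-Schmidt, and checking that a least-squares fit leaves a residual orthogonal to the feature columns. The helper names `project` and `gram_schmidt` and all data are illustrative, and the Gram-Schmidt loop uses the modified variant for better numerical behavior.

```python
import numpy as np

def project(b, a):
    """Orthogonal projection of vector b onto vector a."""
    return (a @ b) / (a @ a) * a

def gram_schmidt(vectors):
    """Return an orthonormal basis (as rows) for the span of the inputs."""
    basis = []
    for v in vectors:
        w = v.astype(float)
        for q in basis:
            w = w - (q @ w) * q          # remove the component along q
        norm = np.linalg.norm(w)
        if norm > 1e-12:                 # skip vectors already in the span
            basis.append(w / norm)
    return np.array(basis)

a = np.array([1.0, 0.0])
b = np.array([2.0, 3.0])
p = project(b, a)

Q = gram_schmidt([np.array([1.0, 1.0, 0.0]),
                  np.array([1.0, 0.0, 1.0]),
                  np.array([2.0, 1.0, 1.0])])   # third = first + second

# Regression as projection: the residual is orthogonal to the columns of X.
X = np.array([[1.0, 0.0], [1.0, 1.0], [1.0, 2.0]])
y = np.array([1.0, 2.0, 2.0])
coef = np.linalg.lstsq(X, y, rcond=None)[0]
residual = y - X @ coef
```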

12. Linear Transformations

- Definition and matrix representation

- Rotation, scaling, shearing

- Visualizations in 2D

- ML Application: Understanding layers in neural nets as transformations
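
Each 2D transformation listed above corresponds to a 2x2 matrix. The sketch below builds a rotation, a scaling, and a shear, applies them to example vectors, and checks the defining linearity property. The angle and factors are arbitrary choices for illustration.

```python
import numpy as np

theta = np.pi / 2                              # rotate by 90 degrees
rotation = np.array([[np.cos(theta), -np.sin(theta)],
                     [np.sin(theta),  np.cos(theta)]])
scaling = np.diag([2.0, 0.5])                  # stretch x, shrink y
shear = np.array([[1.0, 1.0],
                  [0.0, 1.0]])                 # shift x in proportion to y

e1 = np.array([1.0, 0.0])
e2 = np.array([0.0, 1.0])

rotated = rotation @ e1
scaled = scaling @ np.array([1.0, 1.0])
sheared = shear @ e2

# Composition: applying scaling first, then rotation, is one matrix product.
combined = rotation @ scaling

# Linearity: T(a*u + b*v) equals a*T(u) + b*T(v).
u, v = np.array([1.0, 2.0]), np.array([-3.0, 0.5])
lhs = combined @ (2.0 * u + 3.0 * v)
rhs = 2.0 * (combined @ u) + 3.0 * (combined @ v)
```

A dense neural-network layer without its activation function is the same kind of map: a matrix applied to an input vector, plus a bias.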

13. Principal Component Analysis (PCA)

- Dimensionality reduction

- Eigendecomposition of the covariance matrix

- Visualization with real datasets (Iris, MNIST)

- ML Application: Feature reduction, noise reduction
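
The sketch below walks through PCA by eigendecomposition of the covariance matrix. To stay self-contained it uses small synthetic 2D data from a seeded random generator rather than Iris or MNIST; the data-generating recipe is an assumption made only for this example.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic data: the second feature is mostly a scaled copy of the first.
latent = rng.normal(size=200)
X = np.column_stack([latent, 0.5 * latent + 0.1 * rng.normal(size=200)])

# 1. Center the data.
Xc = X - X.mean(axis=0)

# 2. Covariance matrix of the features (columns).
cov = np.cov(Xc, rowvar=False)

# 3. Eigendecomposition, sorted by decreasing eigenvalue.
eigvals, eigvecs = np.linalg.eigh(cov)
order = np.argsort(eigvals)[::-1]
eigvals, eigvecs = eigvals[order], eigvecs[:, order]

# 4. Project onto the first principal component (2D -> 1D).
Z = Xc @ eigvecs[:, :1]

explained = eigvals / eigvals.sum()
print("explained variance ratio:", explained)
```

The variance of the projected data along each component equals the corresponding eigenvalue, which is why the eigenvalues are used to decide how many components to keep.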

14. Tensor Operations (Advanced)

- Generalization of matrices

- Broadcasting and reshaping

- Tensor contraction (einsum)

- ML Application: Deep learning layers and weights
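
A brief sketch of broadcasting, reshaping, and tensor contraction with `np.einsum`. The shapes mimic a batch of 2 items, each a 3x4 matrix, passed through a weight matrix with 5 outputs; all values are placeholders.

```python
import numpy as np

batch = np.arange(24, dtype=float).reshape(2, 3, 4)   # shape (2, 3, 4)

# Broadcasting: the length-4 bias is added along the last axis everywhere.
bias = np.array([1.0, 2.0, 3.0, 4.0])
shifted = batch + bias

# Reshaping: flatten each item in the batch into one long vector.
flat = batch.reshape(2, -1)                            # shape (2, 12)

# Tensor contraction: sum over the shared index j for every batch item b.
W = np.ones((4, 5))
out = np.einsum("bij,jk->bik", batch, W)               # shape (2, 3, 5)

# The same contraction written as a batched matrix product.
same = batch @ W

# einsum can also express a trace (sum over the diagonal).
trace = np.einsum("ii->", np.eye(3))
```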
